Gemma explained: What’s new in Gemma 3

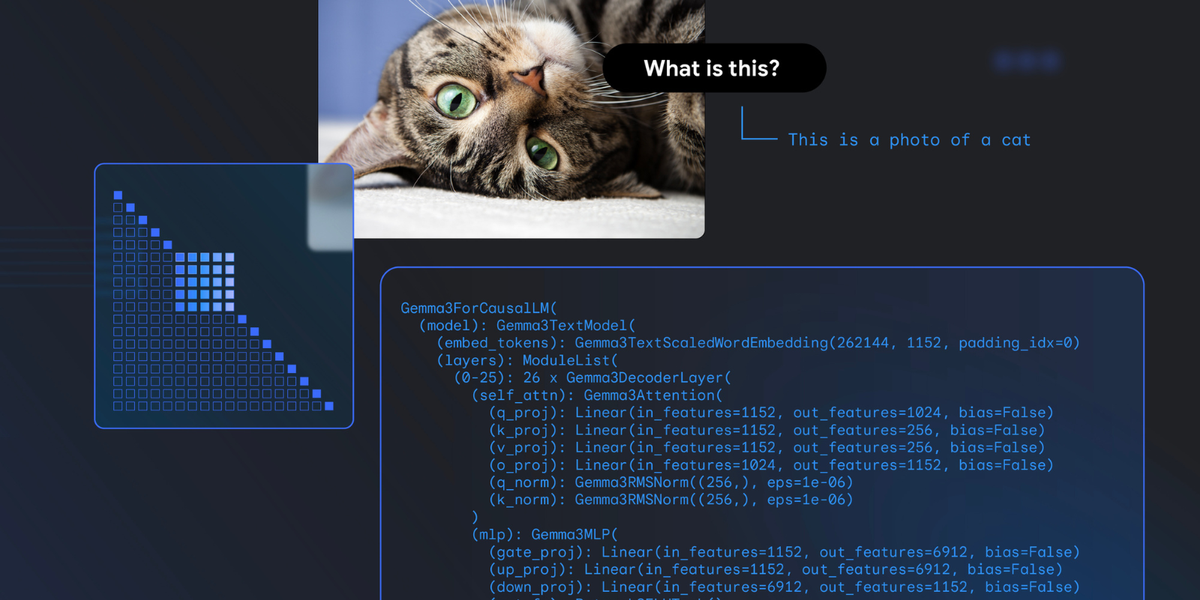

Gemma 3’s new features include vision-language capabilities and architectural changes for improved memory efficiency and longer context handling compared to previous Gemma models.

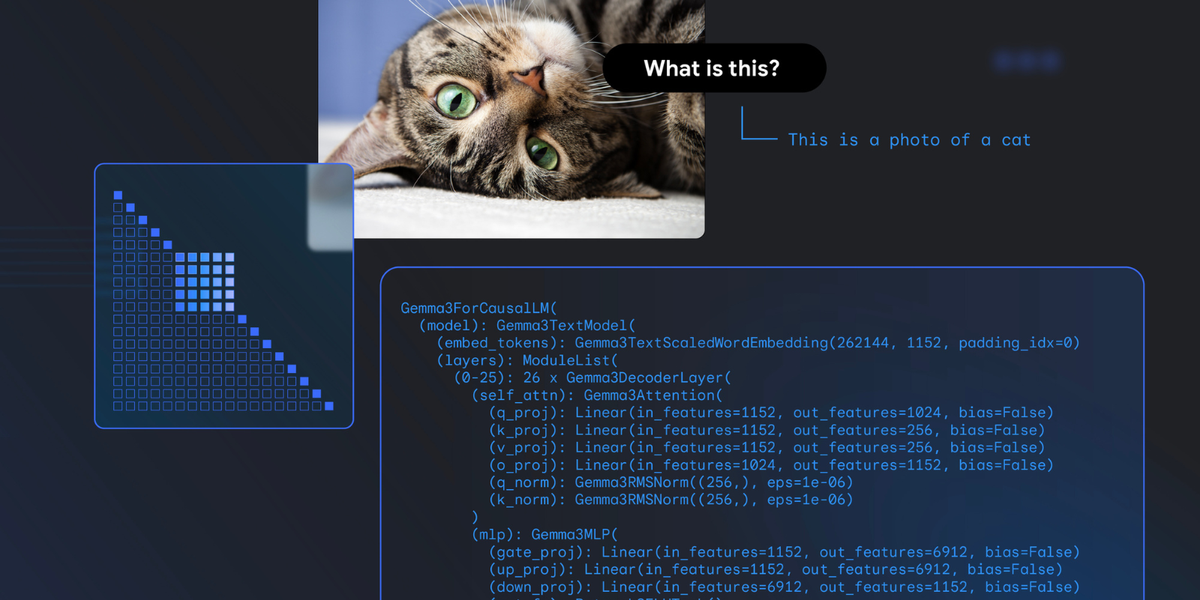

    Gemma3ForConditionalGeneration(
      (vision_tower): SiglipVisionModel(
        (vision_model): SiglipVisionTransformer(
          (embeddings): SiglipVisionEmbeddings(
            (patch_embedding): Conv2d(3, 1152, kernel_size=(14, 14), stride=(14, 14), padding=valid)
            (position_embedding): Embedding(4096, 1152)
          )
          (encoder): SiglipEncoder(
            (layers): ModuleList(
              (0-26): 27 x SiglipEncoderLayer(
                (self_attn): SiglipSdpaAttention(
                  (k_proj): Linear(in_features=1152, out_features=1152, bias=True)
                  (v_proj): Linear(in_features=1152, out_features=1152, bias=True)
                  (q_proj): Linear(in_features=1152, out_features=1152, bias=True)
                  (out_proj): Linear(in_features=1152, out_features=1152, bias=True)
                )
                (layer_norm1): LayerNorm((1152,), eps=1e-06, elementwise_affine=True)
                (mlp): SiglipMLP(
                  (activation_fn): PytorchGELUTanh()
                  (fc1): Linear(in_features=1152, out_features=4304, bias=True)
                  (fc2): Linear(in_features=4304, out_features=1152, bias=True)
                )
                (layer_norm2): LayerNorm((1152,), eps=1e-06, elementwise_affine=True)
              )
            )
          )
          (post_layernorm): LayerNorm((1152,), eps=1e-06, elementwise_affine=True)
        )
      )
      (multi_modal_projector): Gemma3MultiModalProjector(
        (mm_soft_emb_norm): Gemma3RMSNorm((1152,), eps=1e-06)
        (avg_pool): AvgPool2d(kernel_size=4, stride=4, padding=0)
      )
      (language_model): Gemma3ForCausalLM(
        (model): Gemma3TextModel(
          (embed_tokens): Gemma3TextScaledWordEmbedding(262208, 5376, padding_idx=0)
          (layers): ModuleList(
            (0-61): 62 x Gemma3DecoderLayer(
              (self_attn): Gemma3Attention(
                (q_proj): Linear(in_features=5376, out_features=4096, bias=False)
                (k_proj): Linear(in_features=5376, out_features=2048, bias=False)
                (v_proj): Linear(in_features=5376, out_features=2048, bias=False)
                (o_proj): Linear(in_features=4096, out_features=5376, bias=False)
                (q_norm): Gemma3RMSNorm((128,), eps=1e-06)
                (k_norm): Gemma3RMSNorm((128,), eps=1e-06)
              )
              (mlp): Gemma3MLP(
                (gate_proj): Linear(in_features=5376, out_features=21504, bias=False)
                (up_proj): Linear(in_features=5376, out_features=21504, bias=False)
                (down_proj): Linear(in_features=21504, out_features=5376, bias=False)
                (act_fn): PytorchGELUTanh()
              )
              (input_layernorm): Gemma3RMSNorm((5376,), eps=1e-06)
              (post_attention_layernorm): Gemma3RMSNorm((5376,), eps=1e-06)
              (pre_feedforward_layernorm): Gemma3RMSNorm((5376,), eps=1e-06)
              (post_feedforward_layernorm): Gemma3RMSNorm((5376,), eps=1e-06)
            )
          )
          (norm): Gemma3RMSNorm((5376,), eps=1e-06)
          (rotary_emb): Gemma3RotaryEmbedding()
          (rotary_emb_local): Gemma3RotaryEmbedding()
        )
        (lm_head): Linear(in_features=5376, out_features=262208, bias=False)
      )
    )
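
A few useful quantities can be read off the printed module tree with simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, and it rests on three assumptions that the printout does not state outright. It assumes the 4096 vision position embeddings form a square patch grid, that the attention head dimension equals the `q_norm` / `k_norm` size (128), and that every decoder layer caches keys and values for the full context. The last assumption makes the cache figure an upper bound, since it ignores any layers that attend only locally.

```python
import math

# Dimensions copied from the printed module tree.
PATCH_SIZE = 14        # Conv2d kernel_size and stride in patch_embedding
NUM_POSITIONS = 4096   # rows of the vision position_embedding
POOL_KERNEL = 4        # AvgPool2d kernel_size and stride in the projector
Q_OUT = 4096           # q_proj out_features
KV_OUT = 2048          # k_proj and v_proj out_features
HEAD_DIM = 128         # assumed equal to the q_norm / k_norm size
NUM_LAYERS = 62        # number of Gemma3DecoderLayer blocks


def vision_token_budget(num_positions=NUM_POSITIONS,
                        patch_size=PATCH_SIZE,
                        pool_kernel=POOL_KERNEL):
    """Return (input image side in pixels, image tokens after pooling).

    Assumes the position embeddings cover a square grid of patches.
    """
    grid_side = math.isqrt(num_positions)
    if grid_side * grid_side != num_positions:
        raise ValueError("position count is not a perfect square")
    image_side = grid_side * patch_size       # pixels per side
    pooled_side = grid_side // pool_kernel    # grid side after average pooling
    return image_side, pooled_side * pooled_side


def attention_heads(q_out=Q_OUT, kv_out=KV_OUT, head_dim=HEAD_DIM):
    """Return (query heads, key/value heads) implied by the projection widths."""
    return q_out // head_dim, kv_out // head_dim


def kv_cache_values_per_token(kv_out=KV_OUT, num_layers=NUM_LAYERS):
    """Upper bound on cached values per token: one K and one V per layer."""
    return 2 * kv_out * num_layers


if __name__ == "__main__":
    print(vision_token_budget())        # (896, 256)
    print(attention_heads())            # (32, 16)
    print(kv_cache_values_per_token())  # 253952
```

Under these assumptions the vision tower takes a 896 x 896 image and hands 256 pooled tokens to the language model, while the decoder uses 32 query heads that share 16 key/value heads. Having fewer key/value heads than query heads halves the per-token cache relative to giving each query head its own key/value pair.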