An upgraded dev experience in Google AI Studio

Google AI Studio is the fastest place to start building with the Gemini API, with access to our most capable models, including Gemini 2.5 preview models, and generative media models like Imagen, Lyria RealTime, and Veo. At Google I/O, we announced new features to help you build and deploy complete applications, new model capabilities, and new features in the Google Gen AI SDK.

Build apps with Gemini 2.5 Pro code generation

Gemini 2.5 Pro is incredible at coding, so we’re excited to bring it to Google AI Studio’s native code editor. It’s tightly optimized with our Gen AI SDK so it’s easier to generate apps with a simple text, image, or video prompt. The new Build tab is now your gateway to quickly build and deploy AI-powered web apps. We’ve also launched new showcase examples to experiment with new models and more.

Video of text adventure game being generated with Imagen + Gemini

(video sped up for illustrative purposes)

In addition to app generation from a single prompt, you can continue to iterate on your web app over chat. This allows you to make changes, view diffs, and even jump back to previous checkpoints to revert edits.

Compare code versions and use expanded file structure to help manage project development (video sped up for illustrative purposes)

You can also deploy those newly created apps in a single click to Cloud Run.

Quickly deploy your created apps on Cloud Run (video sped up for illustrative purposes)

Google AI Studio apps and generated code use a unique placeholder API key, which lets Google AI Studio proxy all Gemini API calls. Consequently, when you share your app through Google AI Studio, all API usage by its users is attributed to their own free-of-charge Google AI Studio usage, completely bypassing your own API key and quota. You can read more in our FAQ.

This feature is experimental, so you should always check code before sharing your project externally. Our one-shot generation has been primarily optimized to work with Gemini and Imagen models, with support for more models and tool calls coming soon.

Multimodal generation made easy in Google AI Studio

We’ve been working hard to get Google DeepMind’s advanced multimodal models into developers’ toolboxes, faster. The new Generate Media page centralizes the discovery of Imagen, Veo, Gemini with native image generation, and new native speech generation models. Plus, you can experience interactive music generation with Lyria RealTime through the PromptDJ apps built in Google AI Studio.

Explore our generative media models from the Google AI Studio Generate Media tab (video sped up for illustrative purposes)

New native audio for the Live API and text-to-speech (TTS)

With Gemini 2.5 Flash native audio dialog in preview in the Live API, the model now generates even more natural responses with support for over 30 voices. We’ve also added proactive audio, which lets the model distinguish between the speaker and background conversations so it knows when to respond. This makes it possible for you to build conversational AI agents and experiences that feel more intuitive and natural.

Try native audio dialog in the Google AI Studio Stream tab (video sped up for illustrative purposes)

In addition to the Live API, we’ve announced Gemini 2.5 Pro and Flash previews for text-to-speech (TTS) that support native audio output. Now you can craft single- and multi-speaker output with flexible control over delivery style.

Generate speech using the new TTS capabilities (video sped up for illustrative purposes)

Try native audio in the Live API from the Stream tab and experience new TTS capabilities via Generate Speech.
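To make the single- and multi-speaker options more concrete, here is a minimal sketch of what a TTS request body might look like when calling the Gemini API over REST. The field names follow our understanding of the public `generateContent` request shape and should be checked against the current API reference. The model ID, the voice names, and the helper names `build_tts_request` and `pcm_to_wav` are assumptions for illustration, as is the 24 kHz, 16-bit mono format of the returned audio.

```python
import io
import wave

# Assumed model ID; check the current model list before use.
TTS_MODEL = "gemini-2.5-flash-preview-tts"


def build_tts_request(script: str, voices: dict[str, str]) -> dict:
    """Build a request body for single- or multi-speaker speech generation.

    `voices` maps each speaker name used in the script to a prebuilt voice name.
    """
    def voice(name: str) -> dict:
        return {"prebuiltVoiceConfig": {"voiceName": name}}

    if len(voices) == 1:
        # One speaker: a single voice applies to the whole script.
        speech_config = {"voiceConfig": voice(next(iter(voices.values())))}
    else:
        # Several speakers: one voice per named speaker in the script.
        speech_config = {
            "multiSpeakerVoiceConfig": {
                "speakerVoiceConfigs": [
                    {"speaker": speaker, "voiceConfig": voice(name)}
                    for speaker, name in voices.items()
                ]
            }
        }
    return {
        "contents": [{"parts": [{"text": script}]}],
        "generationConfig": {
            "responseModalities": ["AUDIO"],
            "speechConfig": speech_config,
        },
    }


def pcm_to_wav(pcm: bytes, rate: int = 24000) -> bytes:
    """Wrap raw 16-bit mono PCM in a WAV container (sample rate is assumed)."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)
        wf.setframerate(rate)
        wf.writeframes(pcm)
    return buf.getvalue()
```

Delivery style is steered in the prompt text itself, for example `build_tts_request("Joe (excited): We shipped it!\nJane (calm): Nice work.", {"Joe": "Kore", "Jane": "Puck"})`. The audio bytes in the response would then be base64-decoded and passed to `pcm_to_wav` before saving.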

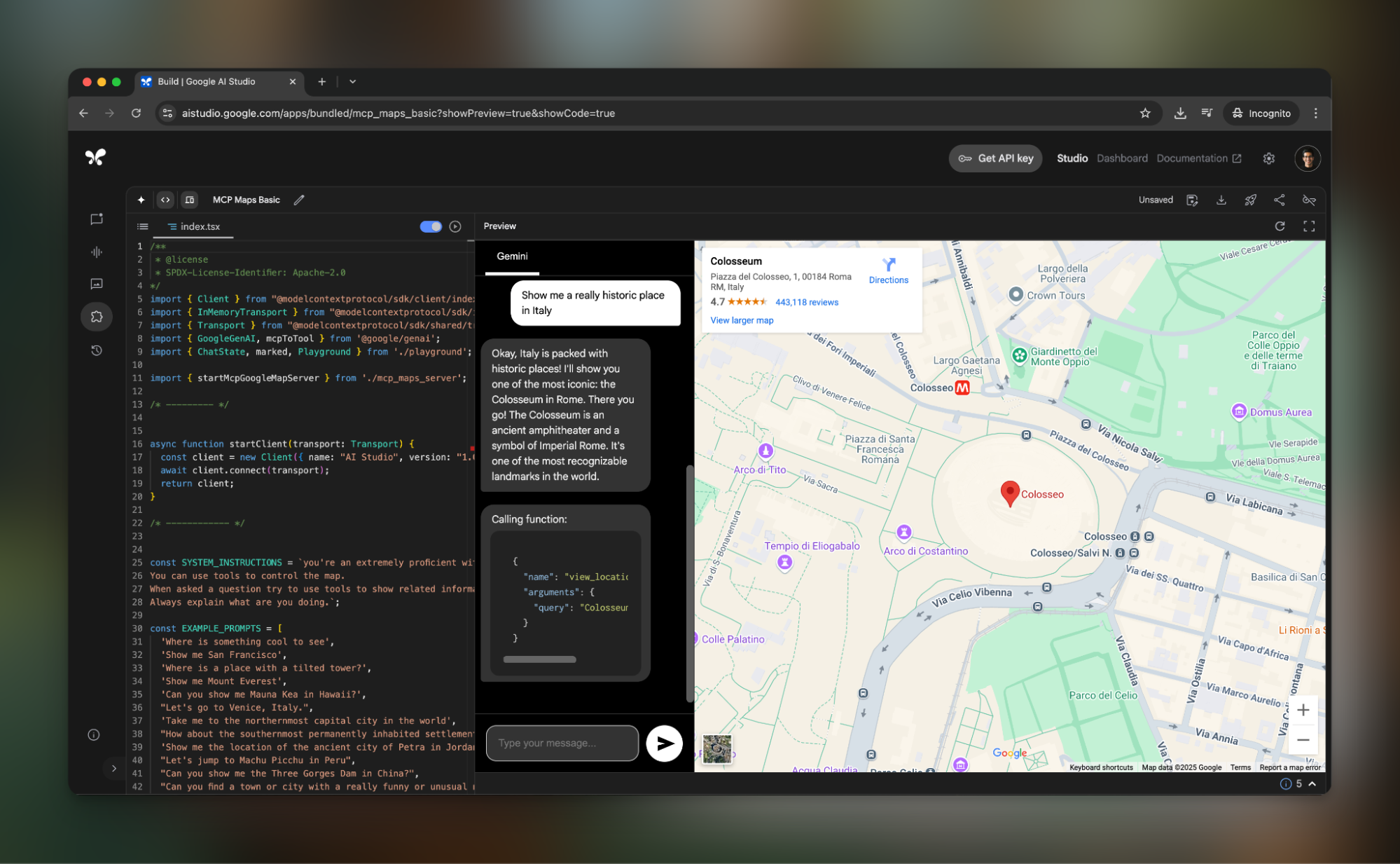

Model Context Protocol (MCP) support

Model Context Protocol (MCP) definitions are also now natively supported in the Google Gen AI SDK for easier integration with a growing number of open-source tools. We’ve included a demo app, combining Google Maps and the Gemini API, that shows how you can use an MCP server within Google AI Studio.
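As a rough picture of the glue code that native MCP support saves you from writing, the sketch below maps an MCP tool definition onto a Gemini-style function declaration and serializes the matching `tools/call` request. It is a conceptual illustration, not the SDK's actual implementation. The `get_directions` tool and both helper names are hypothetical, and real tool schemas may need extra conversion if they use JSON Schema features the model does not accept.

```python
import json

# A tool definition shaped like one entry in an MCP `tools/list` result.
# The tool itself (`get_directions`) is hypothetical.
mcp_tool = {
    "name": "get_directions",
    "description": "Get driving directions between two places.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "origin": {"type": "string"},
            "destination": {"type": "string"},
        },
        "required": ["origin", "destination"],
    },
}


def mcp_tool_to_function_declaration(tool: dict) -> dict:
    """Map an MCP tool definition to a Gemini-style function declaration."""
    return {
        "name": tool["name"],
        "description": tool.get("description", ""),
        # MCP calls the argument schema `inputSchema`; function calling
        # calls it `parameters`.
        "parameters": tool["inputSchema"],
    }


def build_tools_call(request_id: int, name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })
```

When the model responds with a function call for `get_directions`, its arguments would be forwarded to the MCP server with `build_tools_call(1, "get_directions", {...})`, and the server's result returned to the model as the function response.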

New URL Context tool

URL Context is a new experimental tool that gives the model the ability to retrieve and reference content from links you provide. This is helpful for fact-checking, comparison, summarization, and deeper research.
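For illustration, a request that enables URL Context might be assembled as in the sketch below. Because the tool is experimental, treat the `url_context` tool entry and the model ID as assumptions to verify against the current API reference. The helper name `build_url_context_request` is hypothetical.

```python
import json

# Assumed model ID; URL Context is experimental and model support may change.
MODEL = "gemini-2.5-flash-preview-05-20"


def build_url_context_request(question: str, urls: list[str]) -> dict:
    """Build a request body that enables the URL Context tool.

    The links are placed in the prompt text itself; the tool entry only
    switches the retrieval capability on.
    """
    if not urls:
        raise ValueError("provide at least one URL")
    prompt = question + "\n\nSources:\n" + "\n".join(urls)
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "tools": [{"url_context": {}}],
    }


if __name__ == "__main__":
    body = build_url_context_request(
        "Compare the two pages and summarize the differences.",
        ["https://example.com/a", "https://example.com/b"],
    )
    print(json.dumps(body, indent=2))
```

The resulting body would be sent to the `generateContent` endpoint for the chosen model, with the links you want the model to read included directly in the prompt.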

Start building in Google AI Studio

We’re thrilled to bring all of these updates to Google AI Studio, making it the place for developers to explore and build with the latest models Google has to offer.

Explore this announcement and all Google I/O 2025 updates on io.google starting May 22.